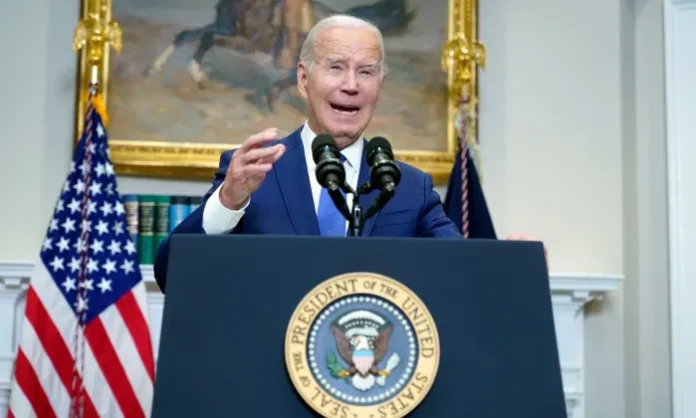

Meta’s Oversight Board recently drew scrutiny after upholding the company’s decision to leave a manipulated video of President Joe Biden on Facebook. The video, edited to misrepresent an interaction between Biden and his granddaughter, was criticized as potentially misleading to viewers. Despite the controversy, the Board concluded that the video did not violate Meta’s manipulated media policy, because it was not created with AI tools and its edits were “obvious” enough that most users would not be misled. The decision has sparked a broader debate over whether Meta’s policies adequately address manipulated content, particularly as major elections approach around the globe.

The Oversight Board criticized Meta’s current rules as “incoherent” and said they focus on how content is created rather than on the harm it could cause, such as electoral interference. It recommended that Meta revise its policy to cover all forms of manipulated media, regardless of how they are produced, and to favor labeling over removal, giving users context without necessarily taking content down. These recommendations are intended to help Meta combat election misinformation more effectively and keep pace with evolving techniques for manipulating digital content.

This case highlights the challenges social media platforms face in balancing freedom of expression with the need to prevent misinformation. As AI and digital editing technologies become more sophisticated, the task of distinguishing harmful content from harmless expression becomes increasingly complex. Meta’s response to the Oversight Board’s recommendations and the subsequent impact on its content moderation policies will be closely watched, especially as the world enters a year filled with critical elections.